Bellman-Ford's algorithm applied to forex pairs

Remember the "thin ice" analogy from currency arbitrage? Path tracing integrals are fairly expensive because states and actions are continuous and each bounce requires ray-intersecting a geometric data structure. Path tracing is the simplest possible Monte Carlo approximation to the rendering equation. In any case, the point of this post was not to develop a functional arbitrage bot but rather to demonstrate the power of graph algorithms in a non-standard use case. We then pass this processed dataframe into nx.DiGraph. The Bellman-Ford algorithm can be applied directly to detect currency arbitrage opportunities! We may be interested in propagating other statistics like the variance, skew, and kurtosis of the value distribution. The recursive raytracing algorithm, invented before path tracing, was actually a non-physical approximation of light transport that assumes the largest lighting contributions reflected off a surface come from one of the following light sources: What if we did Bellman backups over entire distributions, without having to throw away the higher-order moments? Several sources of noise that arise in Q-learning violate the hard Bellman Equality. As mentioned previously, we will only look at data from Binance. Admittedly, all this graph theory seems sort of abstract and boring at first. A well-known problem among RL practitioners is that Q-learning suffers from over-estimation: during off-policy training, predicted Q-values climb higher and higher but the agent doesn't get better at solving the task. It is often more effective to integrate rewards with respect to a "path" of samples actually collected at data collection time than to back up expected Q values one edge at a time and hope that softmax temporal consistency holds up well when accumulating multiple backups.
Dijkstra's, Bellman-Ford, Johnson's, and Floyd-Warshall are good algorithms for solving the shortest paths problem. If any of these sound interesting and you're willing to endure a bit more math jargon, read on; otherwise, feel free to skip to the next section on computer graphics. Unlike the path-integral approach to Q-value estimation, this framework avoids marginalization error by passing richer messages in the single-step Bellman backups. Inter-exchange transaction costs are low (assets are, ironically, centralized into hot and cold wallets). This softmax regularization has a very explicit, information-theoretic interpretation: it is the optimal solution for the Maximum-Entropy RL objective:
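The objective itself did not survive in this copy of the post. For reference, here is one standard way to write the Maximum-Entropy RL objective and its Boltzmann-form optimal policy. The entropy weight alpha and the symbols H and Q_soft are our own notation, not necessarily the original post's.

```latex
\pi^{*} = \arg\max_{\pi} \; \mathbb{E}_{\pi}\Big[\sum_{t} r(s_t, a_t) + \alpha\, \mathcal{H}\big(\pi(\cdot \mid s_t)\big)\Big],
\qquad
\pi^{*}(a \mid s) \propto \exp\!\big(Q_{\mathrm{soft}}(s, a) / \alpha\big)
```

A larger alpha rewards more random policies. As alpha goes to 0, the ordinary expected-return objective is recovered.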

Graph algorithms and currency arbitrage, part 2

This is nothing more than "Occam's Razor" in the parlance of statistics. Having downloaded the raw data, we must now prepare it so that it can be put into a graph. Reasonable Deviations: a rational approach to complexity. This equation takes the same form as the high-temperature softmax limit for Soft Q-learning! Some exchanges have many altcoins but you can only buy them with BTC; this is not well suited for arbitrage. As a reminder, this function returns all negative-weight cycles reachable from a given source vertex, returning the empty list if there are none. Let there be light! It's quite remarkable that all this "knowledge of the world and one's own behavior" can be captured in a single scalar. Re-writing the shortest path relaxation procedure in terms of a directional path cost recovers the Bellman Equality, which underpins the Q-Learning algorithm. It can be absorbed into non-visible energy, reflected off the object, or refracted into the object. Now we have a way to automatically detect mispricings in markets and end up with more money than we started with. This is an excerpt from the resulting JSON file; for each exchange, the pairs field lists all other coins that the key coin can be traded with:
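The JSON excerpt itself is missing here, so the sketch below assumes a hypothetical layout, `{exchange: {coin: {"pairs": [...]}}}`, purely for illustration. It parses the data with Python's standard `json` module. It then applies the filtering step described in this post, dropping coins with fewer than three tradable pairs. The function name `tradable_coins` is ours.

```python
import json

# Hypothetical excerpt: the post's real file layout is not shown, so we assume
# {exchange: {coin: {"pairs": [coins it can be traded with]}}}.
RAW = """
{"binance": {
    "BTC": {"pairs": ["ETH", "USDT", "BNB", "LTC"]},
    "ETH": {"pairs": ["BTC", "USDT", "BNB"]},
    "BNB": {"pairs": ["BTC", "ETH", "USDT"]},
    "XYZ": {"pairs": ["BTC"]}
}}
"""


def tradable_coins(raw_json, exchange, min_pairs=3):
    """Return {coin: pairs} for coins with at least `min_pairs` trading pairs."""
    data = json.loads(raw_json)[exchange]
    return {coin: info["pairs"] for coin, info in data.items()
            if len(info["pairs"]) >= min_pairs}


coins = tradable_coins(RAW, "binance")
print(sorted(coins))  # ['BNB', 'BTC', 'ETH']
```

Poorly connected coins such as the made-up `XYZ` are dropped before any graph is built.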
Graphs arise in various real-world situations, such as road networks, computer networks and, most recently, social networks!

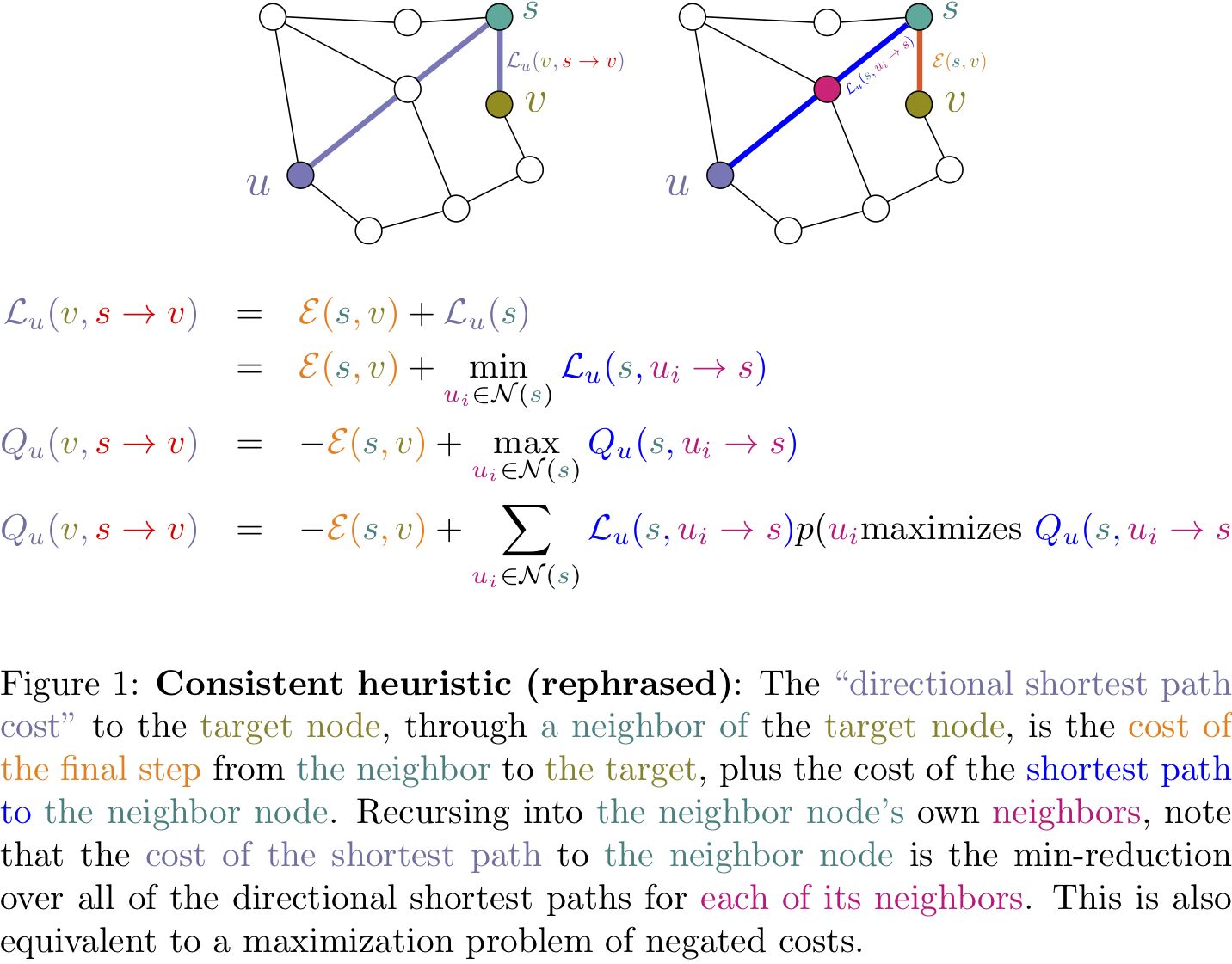

Be careful what you wish for, or you just might find your money "decentralized" from your wallet! Clear water and bright daylight result in caustics. Q-learning is a classic example of dynamic programming. Whether it is done by an illustrator's hand or a computer, the problem of rendering asks: "Given a scene and some light sources, what is the image that arrives at a camera lens?" In fact, Binance charges a standard 0. The total light contribution to a surface is a path integral over all these light bounce paths. The more entropy a distribution has, the less information it contains, and therefore the fewer "assumptions" about the world it makes. These cutting-edge concepts are put into the context of shortest-path algorithms as discussed previously. In this course, you will first learn what a graph is and what some of its most important properties are. They all share the principle of relaxation, whereby costs are initially overestimated for all vertices and gradually corrected using a consistent heuristic on edges. The term "relaxation" in the context of graph traversal is not to be confused with "relaxation" as used in an optimization context, e. Rearranging the terms: Why aren't we initializing all function values to negative-valued numbers? It is not difficult to extend our methodology to arbitrage between different exchanges.
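To make the relaxation principle concrete, here is a minimal Dijkstra sketch using Python's `heapq`. Every cost starts at +infinity (an overestimate) and is only lowered when an edge offers a cheaper route. The graph format (a dict of dicts of edge weights) and the example graph are assumptions for illustration. Dijkstra's algorithm requires non-negative weights, which is why the arbitrage setting, with its possibly negative log-rate weights, calls for Bellman-Ford instead.

```python
import heapq
import math


def dijkstra(graph, source):
    """Shortest-path costs from `source`; edge weights must be non-negative.

    Every cost starts at +infinity (an overestimate) and is lowered by
    relaxation: dist[v] = min(dist[v], dist[u] + w).
    """
    dist = {v: math.inf for v in graph}
    dist[source] = 0.0
    heap = [(0.0, source)]
    while heap:
        d, u = heapq.heappop(heap)
        if d > dist[u]:
            continue  # stale heap entry: a cheaper route to u was already found
        for v, w in graph[u].items():
            if d + w < dist[v]:  # the relaxation step
                dist[v] = d + w
                heapq.heappush(heap, (dist[v], v))
    return dist


graph = {"A": {"B": 4.0, "C": 1.0}, "B": {"D": 1.0}, "C": {"B": 2.0}, "D": {}}
print(dijkstra(graph, "A"))  # {'A': 0.0, 'B': 3.0, 'C': 1.0, 'D': 4.0}
```

The cost of `B` is first overestimated as 4.0 via the direct edge. It is then corrected to 3.0 once the route through `C` is relaxed.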

Raytracing approximation to the rendering equation. Of course, the scene must obey conservation of energy: the electromagnetic energy fed into the scene by radiating objects must equal the total amount of electromagnetic energy being absorbed, reflected, or refracted in the scene. I think this could be done by having a websocket stream that keeps the graph updated with the latest quotes, and using a more advanced method for finding negative-weight cycles that does not involve recomputing the shortest paths via Bellman-Ford. We will finish with minimum spanning trees, which are used to plan road, telephone and computer networks and also find applications in clustering and approximation algorithms. However, all shortest-path algorithms rely on initializing costs to positive infinity, so that the costs propagated during relaxation correspond to actually realizable paths. Taught by Alexander S. Kulikov, Visiting Professor.

Initially, the only thing visible to the camera is the light source. Each intermediate currency position, or "leg", in an arbitrage strategy is like taking a cautious step forward. You will use these algorithms if you choose to work on our Fast Shortest Routes industrial capstone project. Has anyone ever tried using the Maximum Entropy principle as a regularization framework for financial trading strategies? You will also learn Bellman-Ford's algorithm, which can unexpectedly be applied to choose the optimal way of exchanging currencies. Light bounces off flat water like a billiard ball with a perfectly reflected incident angle, but choppy water turns white and no longer behaves like a mirror. Pacific Rim: Uprising. Infinite Arbitrage. The astute reader may wonder whether there is also a corresponding "hard-max" version of rendering, just as the hard-max Bellman Equality is to the Soft Bellman Equality in Q-learning. In practice, policies are made random in order to facilitate exploration of environments whose dynamics and set of states are unknown, e. By the end you will be able to find shortest paths efficiently in any graph.

Y is the amount of Y gained by selling 1 unit of X, and df. Thirdly, we have assumed that you can trade an infinite quantity at the bid and ask. Therefore the updated Q function, after the Bellman update is performed, will obtain a positive bias! In the case of reflected and refracted light, recursive trace rays are branched out to perform further ray intersection, usually terminating at some fixed depth. Here is a table summarizing the equations we explored: Computer graphics! We can then run all of the above functions to produce a directory full of exchange rate data for the listed pairs. So to find this cycle, we walk back along the predecessors until a cycle is detected, then return the cyclic portion of that walk. Secondly, we will only be considering a single snapshot of data from the exchange.
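The walk-back step can be sketched as follows. The sketch assumes predecessors are stored in a plain dict `pred`, mapping each vertex to the vertex it was last relaxed from. The function name and the toy currencies are hypothetical.

```python
def cycle_from_predecessors(pred, start):
    """Walk back along predecessor links from `start` until a vertex repeats,
    then return the cyclic portion of that walk in forward (trading) order,
    with the first vertex repeated at the end.

    Assumes every vertex on the walk has an entry in `pred`, i.e. the walk
    really does run into a cycle.
    """
    walk = []
    v = start
    while v not in walk:
        walk.append(v)
        v = pred[v]
    cycle = walk[walk.index(v):]  # v is the first vertex seen twice
    cycle.reverse()               # predecessor links point backwards
    return cycle + [cycle[0]]


# Hypothetical predecessor map: pred[x] == y means the edge y -> x.
pred = {"USD": "GBP", "EUR": "USD", "GBP": "EUR", "JPY": "USD"}
print(cycle_from_predecessors(pred, "JPY"))  # ['EUR', 'GBP', 'USD', 'EUR']
```

`JPY` leads into the cycle but is not part of it, so it is trimmed from the result.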

In fact, Monte Carlo methods for solving integral equations were developed for studying fission reactions in the Manhattan Project! I then filtered out coins with fewer than three tradable pairs. You can find a nice animation of the Bellman-Ford algorithm here. The last thing we need is a function that calculates the value of an arbitrage opportunity given a negative-weight cycle on a graph. Yet another way to decrease overestimation of Q values is to "smooth" the greediness of the max-operator during the Bellman backup by taking some kind of weighted average over Q values, rather than a hard max that only considers the best expected value. In trading, these two prices are called the bid (the current highest price someone will buy for) and the ask (the current lowest price someone will sell for). Obviously markets are highly dynamic, with thousands of new bids and asks coming in each second. All this raises the question: why is it so hard to find arbitrage? This is easy to implement: we just find the total weight along the path, then exponentiate the negative total, because our weights are the negative logs of the exchange rates. I've also sprinkled in some insights and questions that might be interesting to the AI research audience, so hopefully there's something for everybody here. Work from Nachum et al. The full code for this project can be found in this GitHub repo. We have three very well-known algorithms (currency arbitrage, Q-learning, path tracing) that independently discovered the principle of relaxation used in shortest-path algorithms such as Dijkstra's and Bellman-Ford. This is nice because the proposal distribution over recursive ray directions has infinite support by construction, and Soft Q-learning can be used to tune random exploration of light rays.
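The arbitrage-value calculation described above comes down to a few lines. This sketch assumes edge weights are stored in a dict keyed by `(from, to)` pairs. It also assumes the cycle is given as a vertex list whose first and last entries coincide. Both are our assumptions, not the post's data structures.

```python
import math


def cycle_value(weights, cycle):
    """Multiplier earned by trading once around `cycle`.

    Edge weights are -log(rate), so the product of rates along the path is
    exp(-sum of weights). A result above 1.0 means a profitable cycle.
    """
    total = sum(weights[(u, v)] for u, v in zip(cycle, cycle[1:]))
    return math.exp(-total)


rates = {("USD", "EUR"): 0.9, ("EUR", "GBP"): 0.9, ("GBP", "USD"): 1.3}
weights = {pair: -math.log(r) for pair, r in rates.items()}
print(round(cycle_value(weights, ["USD", "EUR", "GBP", "USD"]), 4))  # 1.053
```

The result equals 0.9 * 0.9 * 1.3 directly. This is the point of the log transform: sums of weights map back to products of rates.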

Preparing the data

I chose this particular row-column scheme because it results in intuitive indexing: df. We will be using the NetworkX package, an intuitive yet extremely well-documented library for dealing with all things graph-related in Python. Is there a distributional RL interpretation of path tracing, such as polarized path tracing? Why does this happen? It should be noted that we are not attempting to build a functional arbitrage bot, but rather to explore how graphs could potentially be used to tackle the problem. Hold this thought, as we will need to introduce some more terminology in the next few sections.

The selection of a proposal distribution for importance-sampled Monte Carlo rendering could utilize Boltzmann distributions with soft Q-learning. Do you know where else path integrals and the need for "physical correctness" arise? The reader should be familiar with undergraduate probability theory and introductory calculus, and be willing to look at some math equations. Scaling this up to animated sequences was also very laborious. In the previous post (which should definitely be read first!) Light rays have no agency; they merely bounce around the scene like RL agents taking completely random actions! A sketch of the proof: assuming Q values are uniformly or normally distributed about the true value function, the Fisher-Tippett-Gnedenko theorem tells us that applying the max operator over multiple normally distributed variables is mean-centered around a Gumbel distribution with a positive mean. Light travels through tree leaves, resulting in umbras that are less "hard" than those of a platonic sphere or a rock. Rather than using fiat currencies as presented in the previous post, we will examine a market of cryptocurrencies, because it is much easier to acquire crypto order book data. An nx.DiGraph is just a weighted directed graph. Cold light has a warm shadow; warm light has a cool shadow. Light transport involves far too many calculations for a human to do by hand, so the old master painters and illustrators came up with a lot of rules about how light behaves and interacts with everyday scenes and objects. Hold that thought, as this detail will be revisited when we discuss computer graphics!
While the arbitrage system I implemented was capable of detecting arb opportunities, I never got around to fully automating the execution and order confirmation subsystems. Firstly, we take negative logs as discussed in the previous post. By introducing a confidence penalty as an implicit regularization term, our optimization objective is no longer optimizing for the cumulative expected reward from the environment. Randomness can come from imperfect optimization over actions during the Bellman update, poor function approximation in the model, random label noise, e. Of course, to run this strategy live would require us to manage our inventory not just on a currency level but per currency per exchange, and factors like the congestion of the Bitcoin network would come into play. The late Peter Cushing resurrected for a Star Wars movie.
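Here is why the negative log works. The sum of -log(r_i) over a cycle equals -log of the product of the r_i. So a cycle whose exchange rates multiply to more than 1 is exactly a cycle whose -log weights sum to less than 0, i.e. a negative-weight cycle. A minimal check with made-up rates; the function name is hypothetical:

```python
import math


def is_arbitrage(rates):
    """True if multiplying the exchange rates around a cycle yields > 1.

    Uses the negative-log trick: sum(-log r) = -log(prod r), so
    prod r > 1  <=>  sum(-log r) < 0, i.e. a negative-weight cycle.
    """
    return sum(-math.log(r) for r in rates) < 0


print(is_arbitrage([0.9, 0.9, 1.3]))  # True  (0.9 * 0.9 * 1.3 = 1.053)
print(is_arbitrage([0.9, 0.9, 1.2]))  # False (0.9 * 0.9 * 1.2 = 0.972)
```

Working in log space turns "maximize a product of rates" into "minimize a sum of weights", which is the form shortest-path algorithms expect.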

These coins are unlikely to participate in arbitrage; we would rather have a graph that is more connected. No prior knowledge of finance, reinforcement learning, or computer graphics is needed. In my sophomore year of college, I caught the cryptocurrency bug and set out to build an automated arbitrage bot for scraping these opportunities across exchanges. Secondly, in our dataframe we currently have NaN whenever there is no edge between two vertices. In the infinite-temperature limit, all Q-values are averaged equally and the softmax becomes a mean, corresponding to the return of a completely random policy. In fact, if the policy distribution has the form of a Boltzmann distribution:
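One concrete reading of "a weighted average over Q values" is the expectation of Q under a Boltzmann (softmax) distribution with some temperature. The sketch below shows both limits described here: at low temperature it approaches the hard max, and at high temperature it approaches the plain mean. The function name and the Q-values are illustrative. Soft Q-learning's own backup is usually written as a log-sum-exp rather than this weighted average, though the two share the same low-temperature limit.

```python
import math


def boltzmann_average(q_values, temperature):
    """Expected Q-value under a Boltzmann (softmax) policy.

    Low temperature -> weight concentrates on the best action (hard max).
    High temperature -> weights become uniform (plain mean, a random policy).
    """
    m = max(q_values)  # subtract the max before exponentiating, for stability
    weights = [math.exp((q - m) / temperature) for q in q_values]
    z = sum(weights)
    return sum(w * q for w, q in zip(weights, q_values)) / z


q = [1.0, 2.0, 3.0]
for t in (0.01, 1.0, 100.0):
    print(t, round(boltzmann_average(q, t), 3))
```

Raising the temperature lowers the result monotonically, which is the "more pessimistic" effect described in this post.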
Quants have developed a rather explicit form of Occam's Razor by tending to rely on models with as few statistical priors as possible, such as linear models and Gaussian Process Regression with simple kernels. Below is a comparison of a ray-traced image and a path-traced image. Before passing the dataframe to nx.DiGraph, we need to set these to zero. We redefine a notion of Bellman consistency for expected future returns:
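The post handles the NaN problem by zero-filling before building an `nx.DiGraph`. For readers without pandas or NetworkX at hand, the sketch below performs the equivalent step with plain dictionaries. Missing markets (NaN) are skipped so they never become edges, and every remaining rate becomes a `-log(rate)` weight. The rate table and its numbers are hypothetical. Rows and columns follow the post's convention: the entry at row X, column Y is the amount of Y received for 1 unit of X.

```python
import math

NAN = float("nan")

# Hypothetical rate table: rates[X][Y] = units of Y received per unit of X.
# NaN marks "no market between these two coins".
rates = {
    "BTC": {"BTC": NAN, "ETH": 30.0, "USDT": 60000.0},
    "ETH": {"BTC": 0.033, "ETH": NAN, "USDT": NAN},
    "USDT": {"BTC": 1 / 60100.0, "ETH": 1 / 2010.0, "USDT": NAN},
}


def to_weighted_edges(rates):
    """Turn an adjacency table into (u, v, -log rate) edges, skipping NaN.

    A missing market must not become an edge, which is what zero-filling
    achieves when the table is handed to a graph library as an adjacency matrix.
    """
    edges = []
    for u, row in rates.items():
        for v, rate in row.items():
            if not math.isnan(rate):
                edges.append((u, v, -math.log(rate)))
    return edges


for edge in to_weighted_edges(rates):
    print(edge)
```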

Then you'll learn several ways to traverse graphs and how you can do useful things while traversing the graph in some order. Firstly, we will focus on arbitrage within a single exchange. To implement Bellman-Ford, we make use of the funky defaultdict data structure. Not so fast! Secondly, it is likely that this whole analysis is flawed because of the way the data was collected.
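A minimal Bellman-Ford sketch in that spirit follows. It is not the post's own function, which is not shown here. It runs |V| relaxation passes over an edge list, using `defaultdict` so unseen vertices default to +infinity. If the last pass still relaxes an edge, it walks back along predecessors to recover one negative-weight cycle. The edge-list format and the function name are our assumptions. Unlike the function described in this post, it returns a single cycle rather than all of them.

```python
import math
from collections import defaultdict


def find_negative_cycle(edges, source):
    """Return one negative-weight cycle reachable from `source` as a vertex
    list (first == last), or [] if there is none.

    `edges` is a list of (u, v, weight) tuples.
    """
    n = len({u for u, _, _ in edges} | {v for _, v, _ in edges})
    dist = defaultdict(lambda: math.inf)  # unseen vertices start at +infinity
    dist[source] = 0.0
    pred = {}
    last_relaxed = None
    for _ in range(n):  # n passes: the n-th one only relaxes if a cycle exists
        last_relaxed = None
        for u, v, w in edges:
            if dist[u] + w < dist[v]:
                dist[v] = dist[u] + w
                pred[v] = u
                last_relaxed = v
    if last_relaxed is None:
        return []
    # Step back n times so we are guaranteed to be standing on the cycle.
    y = last_relaxed
    for _ in range(n):
        y = pred[y]
    cycle = [y]
    cur = pred[y]
    while cur != y:
        cycle.append(cur)
        cur = pred[cur]
    cycle.append(y)
    cycle.reverse()  # predecessors point backwards; flip to trading order
    return cycle


rates = {("USD", "EUR"): 0.9, ("EUR", "GBP"): 0.9,
         ("GBP", "USD"): 1.3, ("EUR", "USD"): 1.1}
edges = [(u, v, -math.log(r)) for (u, v), r in rates.items()]
print(find_negative_cycle(edges, "USD"))  # ['USD', 'EUR', 'GBP', 'USD']
```

The USD/EUR round trip (0.9 * 1.1 = 0.99) is not profitable and is correctly ignored. Only the three-leg cycle worth 1.053 is reported.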

Dampening Q values can also be accomplished crudely by decreasing the discount factor 0. Do we have a money-printing machine yet? One way to deal with this is double Q-learning, which re-evaluates the optimal next-state action value using an i. We will arrange it in the dataframe such that it constitutes an adjacency matrix: Larger temperatures in the softmax drag the mean away from the max value, resulting in more pessimistic (lower) Q values. Algorithms on Graphs. I chose Binance not because it has a large selection of altcoins, but because most altcoins can trade directly with multiple pairs, e. Shortest-path algorithms typically associate edges with costs, and the objective is to minimize the total cost. Any reflected or refracted light is emitted from the surface and continues in another random direction, and the process repeats until there are no photons left or it is absorbed by the camera lens.
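The double Q-learning idea can be illustrated without any RL machinery. In the sketch below, every action's true value is 0, so an unbiased estimate of the best value is also 0. The single estimator selects and evaluates the argmax with the same noisy estimate, and it comes out clearly positive, matching the Gumbel-style overestimation argument above. The double estimator selects with one estimate and evaluates with an independent second one, which removes that bias in this setup. The Gaussian noise model and all parameter values are assumptions for illustration.

```python
import random
import statistics


def single_vs_double(n_actions=10, trials=20_000, seed=0):
    """Compare single and double estimators of max_a E[Q(a)] when every
    true Q(a) is 0 and estimates carry independent unit Gaussian noise."""
    rng = random.Random(seed)
    single, double = [], []
    for _ in range(trials):
        a = [rng.gauss(0.0, 1.0) for _ in range(n_actions)]  # estimate A
        b = [rng.gauss(0.0, 1.0) for _ in range(n_actions)]  # independent estimate B
        best = max(range(n_actions), key=a.__getitem__)      # select with A
        single.append(a[best])  # evaluate with A: biased upwards
        double.append(b[best])  # evaluate with B: unbiased here
    return statistics.fmean(single), statistics.fmean(double)


print(single_vs_double())
```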

Lots of speculative activity, whose bias generates lots of mispricing. Cryptocurrencies, being unregulated speculative digital assets, are ripe for cross-exchange arbitrage opportunities: You will learn Dijkstra's Algorithm, which can be applied to find the shortest route home from work. As anyone who has tried to exchange currency on holiday will know, there are actually two exchange rates for a given currency pair, depending on whether you are buying or selling the currency.
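Turning one bid/ask quote into two directed exchange rates can be sketched as below. The helper name and the ETH/BTC numbers are hypothetical. The only assumption is the usual quoting convention: prices are in units of the quote currency per unit of the base currency.

```python
def quote_to_rates(base, quote, bid, ask):
    """Two directed exchange rates from one base/quote order-book quote.

    Selling 1 unit of base at the bid yields `bid` units of quote.
    Buying base at the ask costs `ask` quote per base, so 1 unit of
    quote buys 1 / ask units of base.
    """
    return {(base, quote): bid, (quote, base): 1.0 / ask}


rates = quote_to_rates("ETH", "BTC", bid=0.0330, ask=0.0331)
round_trip = rates[("ETH", "BTC")] * rates[("BTC", "ETH")]
print(round_trip < 1.0)  # True: the spread makes ETH -> BTC -> ETH lose money
```

Converting and immediately converting back multiplies your holdings by bid/ask, which is below 1. The spread is the cost of the round trip.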


Let's take a look at how modern RL algorithms are able to handle random path costs. But would it still be boring if I told you that efficiently detecting negative cycles in graphs is a multi-billion dollar business? It wasn't until , with the independent discovery of the rendering equation by David Immel et al. This market determines the exchange rate for local currencies when you travel abroad. As it happens, this is very easy to deal with in the context of graphs.

This was not really the case with NetworkX; it turns out that we already did most of the hard work when we put the data into our pandas adjacency matrix. Michael Levin, Lecturer. This actually recovers the motivation of Distributional Reinforcement Learning, in which "edges" in the shortest path algorithm propagate distributions over values rather than collapsing everything into a scalar. All of these can violate the Bellman Equality, which may cause learning to diverge or get stuck in a poor local minimum.

Bellman-Ford

Emitting material; direct exposure to light sources; strongly reflected light, i. The right way to structure this problem is to think of edge weights as random variables that change over time. Course 3 of 6 in the Data Structures and Algorithms Specialization. Assuming Q-value noise is independent of the max action, the use of an i. Wow, what a mouthful! Here's a quick introduction to Bellman-Ford, which is actually easier to understand than the famous Dijkstra's Algorithm. Simply taking the greedy minimum among all edge costs does not take into account the probability of various outcomes happening in the market. And finally, thank you for reading! This effectively means parsing it from the raw JSON and putting it into a pandas dataframe.

Today, we will apply this to real-world data. Albert Bierstadt, Scenery in the Grand Tetons. For those new to Reinforcement Learning, it's easiest to understand Q-Learning in the context of an environment that yields a reward only at the terminal transition: Can sampling algorithms used in rendering be leveraged for reinforcement learning? Summarised in one line:
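Here is a minimal tabular sketch of the Bellman backup in the terminal-reward setting: a deterministic chain where only the final transition pays a reward of 1. Repeated backups propagate that reward backwards one edge at a time, discounted by gamma per step, much as relaxation propagates costs in a shortest-path algorithm. The chain, the discount factor of 0.9, and the function name are illustrative assumptions. This is full dynamic programming over a known model, not sample-based Q-learning.

```python
def chain_q_values(n_states=5, gamma=0.9, sweeps=50):
    """Tabular Bellman backups on a deterministic chain 0 -> ... -> n-1.

    Actions: 0 = left, 1 = right. Moving right from state n-2 enters the
    terminal state n-1 with reward 1; every other transition gives 0.
    Backup: Q(s, a) = r + gamma * max_a' Q(s', a'), with Q(terminal, .) = 0.
    """
    terminal = n_states - 1
    q = [[0.0, 0.0] for _ in range(n_states)]
    for _ in range(sweeps):
        for s in range(terminal):  # the terminal state keeps Q = 0
            for a in (0, 1):
                s_next = max(s - 1, 0) if a == 0 else s + 1
                r = 1.0 if s_next == terminal else 0.0
                future = 0.0 if s_next == terminal else max(q[s_next])
                q[s][a] = r + gamma * future
    return q


for s, (left, right) in enumerate(chain_q_values()):
    print(s, round(left, 4), round(right, 4))
```

Moving right from state 0 converges to 0.9 ** 3 = 0.729: the terminal reward discounted once for each step between them.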

Directional Shortest-Path

This excerpt reveals something that we glossed over completely in the previous post. We will then talk about shortest path algorithms, from the basic ones to those which open the door to much faster algorithms used in Google Maps and other navigational services. For example, many distributions have the same mean value. Execution of trading strategies is an entire research area of its own, and can be likened to crossing a frozen lake as quickly as possible. Given that multi-step Soft Q-learning (PCL) and Distributional RL take complementary approaches to propagating value distributions, I'm also excited to see whether the approaches can be combined, e. This equation has applications beyond entertainment: the inverse problem is studied in astrophysics simulations (given the observed radiance of a supernova, what are the properties of its nuclear reactions?).
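A tiny illustration of how much a single scalar can hide: the hypothetical return samples below have the same mean but very different spreads. The spread is exactly the information a distributional value function would keep.

```python
import statistics

# Two hypothetical return distributions for the same action, as samples.
safe = [1.0, 1.0, 1.0, 1.0]
risky = [-2.0, 4.0, -2.0, 4.0]

print(statistics.fmean(safe), statistics.fmean(risky))          # 1.0 1.0
print(statistics.pvariance(safe), statistics.pvariance(risky))  # 0.0 9.0
```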

The Maximum Entropy Principle is a framework for limiting overfitting in RL models, as it limits the amount of information (in nats) contained in the policy. Because of this temperature-controlled softmax, our reward objective is no longer simply to "maximize expected total reward"; rather, it is more similar to "maximizing the top-k expected rewards". Remove Henry Cavill's mustache to re-shoot some scenes because he needs the mustache for another movie. An order consists of a price and a quantity, so we will only be able to fill a limited quantity at the ask price. The rendering integral is also an inhomogeneous Fredholm equation of the second kind, which has the general form:
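For reference, here is the standard general form of an inhomogeneous Fredholm equation of the second kind. It has an unknown function f, a known source term g, a kernel K, and a scalar lambda.

```latex
f(x) = g(x) + \lambda \int_{a}^{b} K(x, y)\, f(y)\, dy
```

Read loosely in rendering terms, f plays the role of outgoing radiance and g of emitted radiance, while the integral collects reflected incoming light. This mapping is our gloss rather than a statement from the post.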


Cold light has a warm shadow, warm light has a cool shadow. While the arbitrage system I implemented was capable of detecting arb opportunities, I never got around to fully automating the execution and order confirmation subsystems. This process is repeated ad infinitum for many rays until the inflow vs.

Although Soft Q-Learning can regularize against model complexity, updates are still backed up over single timesteps. As a reminder, this function returns all negative-weight cycles reachable from a given source vertex, returning the empty list if there are none. Has anyone ever tried using the Maximum Entropy principle as a regularization framework for financial trading strategies? Exchange APIs expose much more order book depth, and trading cryptos requires no license. In trading, these two prices are called the bid (the current highest price someone will buy for) and the ask (the current lowest price at which someone will sell). You will use these algorithms if you choose to work on our Fast Shortest Routes industrial capstone project. Here are some examples of the enormous strides rendering technology has made in the last 20 years: The column headers will be the same as the row headers, consisting of all the coins we are considering. Light rays have no agency; they merely bounce around the scene like RL agents taking completely random actions! Yet another way to decrease overestimation of Q values is to "smooth" the greediness of the max operator during the Bellman backup, by taking some kind of weighted average over Q values rather than a hard max that only considers the best expected value. Daniel M Kane Assistant Professor. In this course, you will first learn what a graph is and what some of its most important properties are. In practice, policies are made to be random in order to facilitate exploration of environments whose dynamics and set of states are unknown. Work from Nachum et al. A lot of things can still go wrong.
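The function itself is not reproduced in this section, so here is our own minimal Bellman-Ford sketch of the same idea in plain Python (the name `find_negative_cycles` and the `(u, v, weight)` edge-list format are assumptions, not the post's API). After |V| - 1 rounds of relaxation, any edge that can still be relaxed lies downstream of a negative cycle; walking predecessor pointers back |V| steps lands inside that cycle. It collects the distinct cycles exposed this way, which need not be every negative cycle in the graph.

```python
def find_negative_cycles(vertices, edges, source):
    """Bellman-Ford from `source`.

    vertices: iterable of hashable vertex ids
    edges:    list of (u, v, weight) tuples, one per directed edge u -> v
    Returns negative-weight cycles reachable from `source`, each written as
    [a, b, ..., a] in edge order, or [] if relaxation converges.
    """
    dist = {v: float("inf") for v in vertices}
    pred = {v: None for v in dist}
    dist[source] = 0.0
    n = len(dist)

    # Standard phase: |V| - 1 rounds of relaxing every edge.
    for _ in range(n - 1):
        for u, v, w in edges:
            if dist[u] + w < dist[v]:
                dist[v] = dist[u] + w
                pred[v] = u

    cycles, seen = [], set()
    for u, v, w in edges:
        if dist[u] + w < dist[v]:
            # Still relaxable after |V| - 1 rounds: a negative cycle feeds into v.
            pred[v] = u
            x = v
            for _ in range(n):             # walking back |V| steps lands on the cycle
                x = pred[x]
            cycle = [x]
            y = pred[x]
            while y != x:                  # follow predecessors once around the cycle
                cycle.append(y)
                y = pred[y]
            cycle.append(x)
            cycle.reverse()                # predecessors run backwards; restore edge order
            key = frozenset(cycle)
            if key not in seen:            # skip cycles already reported
                seen.add(key)
                cycles.append(cycle)
    return cycles


# Usage: B -> C -> B has total weight -1, so it is reported.
example_edges = [("A", "B", 1.0), ("B", "C", -2.0), ("C", "B", 1.0), ("C", "D", 3.0)]
print(find_negative_cycles(["A", "B", "C", "D"], example_edges, "A"))
```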
Pacific Rim: Uprising.

Today, we will apply this to real-world data. To find all negative-weight cycles, we can simply call the above procedure on every vertex and then eliminate duplicates. Q-learning is a classic example of dynamic programming. As anyone who has tried to exchange currency on holiday will know, there are actually two exchange rates for a given currency pair, depending on whether you are buying or selling the currency. We will finish with minimum spanning trees, which are used to plan road, telephone and computer networks and also find applications in clustering and approximation algorithms. Secondly, in our dataframe we currently have NaN whenever there is no edge between two vertices. However, a lot of this painterly understanding, though breathtaking, was non-rigorous and physically inaccurate. Unfortunately, I got some coins stolen and lost interest in cryptos shortly thereafter. Is there a distributional RL interpretation of path tracing, such as polarized path tracing? The late Peter Cushing resurrected for a Star Wars movie. Here is a table summarizing the equations we explored:
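The bid/ask and NaN handling can be sketched without pandas. This is a minimal illustration under stated assumptions: instead of the post's dataframe it takes a plain dict of hypothetical `(bid, ask)` quotes keyed by `(base, quote)` pairs, and the helpers `edges_from_quotes` and `canonical_cycle` are our own names. Selling the base at the bid gives the edge base → quote with rate `bid`; buying the base at the ask gives quote → base with rate `1 / ask`. Each edge is weighted by `-log(rate)`, so a profitable loop has negative total weight, and a missing quote simply produces no edge. `canonical_cycle` shows one way to eliminate duplicates when the cycle search is repeated from every vertex.

```python
import math

def edges_from_quotes(quotes):
    """quotes: {(base, quote): (bid, ask)}, prices of 1 unit of base in units of quote.
    Returns directed edges (u, v, weight) with weight = -log(units of v per unit of u)."""
    edges = []
    for (base, quote), (bid, ask) in quotes.items():
        if bid is None or ask is None or math.isnan(bid) or math.isnan(ask):
            continue                                      # no market: no edge (the NaN cells)
        edges.append((base, quote, -math.log(bid)))       # sell 1 base, receive `bid` quote
        edges.append((quote, base, math.log(ask)))        # buy 1 base for `ask` quote: -log(1/ask)
    return edges

def canonical_cycle(cycle):
    """Rotate a closed cycle [a, b, c, a] to start at its smallest vertex, so the same
    loop found from different source vertices compares equal."""
    body = cycle[:-1]
    i = body.index(min(body))
    rotated = body[i:] + body[:i]
    return tuple(rotated + [rotated[0]])

quotes = {("BTC", "USDT"): (100.0, 101.0), ("ETH", "USDT"): (10.0, float("nan"))}  # hypothetical
print(edges_from_quotes(quotes))
found = [["C", "A", "B", "C"], ["A", "B", "C", "A"], ["B", "C", "A", "B"]]
print({canonical_cycle(c) for c in found})   # one distinct loop
```

Note that a round trip BTC → USDT → BTC has positive total weight (log 101 - log 100), which is exactly the bid-ask spread working against us.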

Raytracing approximation to the rendering equation. Unlike the path-integral approach to Q-value estimation, this framework avoids marginalization error by passing richer messages in the single-step Bellman backups. A sketch of the proof: assuming Q-value estimates are normally distributed about the true value function, the Fisher-Tippett-Gnedenko theorem tells us that the maximum over many such normally distributed variables is approximately Gumbel-distributed with a positive mean, so applying the max operator in the Bellman backup is biased upward. This was not really the case with NetworkX: it turns out that we already did most of the hard work when we put the data into our pandas adjacency matrix. One must be able to forecast the stability of each step and know what steps follow, or else one can get "stuck" holding a lot of a currency that gives out like thin ice and becomes worthless. Here's a diagram of what's going on along with an annotated mathematical expression. Reasonable Deviations a rational approach to complexity. Michael Levin Lecturer. Light transport involves far too many calculations for a human to do by hand, so the old master painters and illustrators came up with a lot of rules about how light behaves and interacts with everyday scenes and objects. Here's a quick introduction to Bellman-Ford, which is actually easier to understand than the famous Dijkstra's algorithm. Wednesday, August 8, Dijkstra's in Disguise. Lastly, this analysis has only been for a single snapshot.
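The overestimation argument is easy to check numerically. In this sketch (our own; `max_estimate_bias` is a hypothetical helper), every action has the same true Q value of 0, and each estimate adds zero-mean Gaussian noise, so every individual estimate is unbiased. Taking the max over them is not: the average of the max is positive and grows with the number of actions, as the extreme-value argument predicts.

```python
import random
import statistics

def max_estimate_bias(n_actions, noise_std=1.0, n_trials=5_000, seed=0):
    """Every action's true Q value is 0.0 and each estimate adds zero-mean Gaussian
    noise, so each single estimate is unbiased. Returns the average of
    max_a Q_hat(a), i.e. the bias of the max relative to the true max of 0.0."""
    rng = random.Random(seed)
    maxima = [max(rng.gauss(0.0, noise_std) for _ in range(n_actions))
              for _ in range(n_trials)]
    return statistics.mean(maxima)

for n in (1, 2, 10, 100):
    print(f"{n:>3} actions: average max estimate = {max_estimate_bias(n):.3f}")
```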

An excellent explanation of the maximum entropy principle is reproduced below from Brian Ziebart's PhD thesis: To draw a connection back to currency arbitrage and the world of finance, limiting the number of assumptions in a model is of paramount importance to quantitative researchers at hedge funds, since hundreds of millions of USD could be at stake. This also applies to all fungible assets in general, but currencies tend to be the most strongly-connected vertices in the graph representing the financial markets. The difference is like night and day: Let's model a currency exchange's order book (the ledger of pending transactions) as a graph: We then pass this processed dataframe into the nx.DiGraph constructor. Whether it is done by an illustrator's hand or a computer, the problem of rendering asks "Given a scene and some light sources, what is the image that arrives at a camera lens?" If you're looking for the fastest time to get to work, the cheapest way to connect a set of computers into a network, or an efficient algorithm to automatically find communities and opinion leaders in Facebook, you're going to work with graphs and algorithms on graphs. The use of a multi-step return can be thought of as a path-integral solution to marginalizing out random variables occurring during a multi-step decision process (such as random non-Markovian dynamics). Here are some examples of these rules: Dampening Q values can also be accomplished crudely by decreasing the discount factor 0. Graphs arise in various real-world situations, such as road networks, computer networks and, most recently, social networks! Thirdly, we have assumed that you can trade an infinite quantity at the bid and ask.
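The multi-step return can be written as a short fold over the rewards actually observed along one sampled path, bootstrapping from a value estimate only at the end. This is a minimal sketch; `n_step_target`, the rewards, and the bootstrap values are hypothetical. With an empty reward list it reduces to the bootstrap value, with one reward it is the usual one-step target, and a smaller `gamma` shrinks the weight `gamma ** n` placed on the bootstrapped estimate, which is the crude dampening mentioned above.

```python
def n_step_target(rewards, bootstrap_value, gamma):
    """Discounted n-step return
        r_0 + gamma * r_1 + ... + gamma**(n-1) * r_(n-1) + gamma**n * V(s_n),
    where `rewards` are the n rewards observed along one sampled path and
    `bootstrap_value` is the current estimate V(s_n) at the state reached after them."""
    g = bootstrap_value
    for r in reversed(rewards):      # fold from the end of the path: G <- r + gamma * G
        g = r + gamma * g
    return g

path_rewards = [1.0, 0.0, 0.0, 2.0]  # hypothetical rewards along one trajectory
# V(s_1) = 3.0 and V(s_4) = 0.5 are hypothetical value estimates.
print(n_step_target(path_rewards[:1], bootstrap_value=3.0, gamma=0.9))  # one-step target
print(n_step_target(path_rewards, bootstrap_value=0.5, gamma=0.9))      # four-step target
```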
This is easy to implement: we just find the total weight along the path and then exponentiate the negative of that total, because our weights are the negative logs of the exchange rates. Of course, running this strategy live would require us to manage our inventory not just on a currency level but per currency per exchange, and factors like the congestion of the bitcoin network would come into play. Do we have a money printing machine yet? In fact, if the policy distribution has the form of a Boltzmann distribution:
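A minimal sketch of that computation follows. The helper `cycle_profit`, the exchange rates, and the flat per-trade `fee` parameter are hypothetical illustrations, not Binance's actual fee schedule. Because each weight is `-log(rate)`, summing the weights along the loop and exponentiating the negative total multiplies the exchange rates back together; a result above 1 means the loop returns more than it started with.

```python
import math

def cycle_profit(cycle, weights, fee=0.0):
    """Multiplicative payoff of trading once around `cycle` (e.g. ["USD", "EUR", "GBP", "USD"]),
    where weights[(u, v)] = -log(rate u -> v). `fee` is a hypothetical flat
    fractional fee charged on every trade in the loop."""
    total_weight = sum(weights[(u, v)] for u, v in zip(cycle, cycle[1:]))
    gross = math.exp(-total_weight)             # = product of the exchange rates
    return gross * (1.0 - fee) ** (len(cycle) - 1)

rates = {("USD", "EUR"): 0.9, ("EUR", "GBP"): 0.9, ("GBP", "USD"): 1.25}  # hypothetical
weights = {pair: -math.log(r) for pair, r in rates.items()}
loop = ["USD", "EUR", "GBP", "USD"]
print(cycle_profit(loop, weights))              # about 1.0125, i.e. +1.25% before fees
print(cycle_profit(loop, weights, fee=0.01))    # below 1 once a 1% fee per trade is charged
```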

Then you'll learn several ways to traverse graphs and how you can do useful things while traversing the graph in some order. The main contribution of the seminal Bellemare et al. In fact, Binance charges a standard 0. Infinite Arbitrage Summarised in one line: A couple of the aforementioned RL works make heavy use of the terminology "path integrals". We will arrange it in the dataframe such that it constitutes an adjacency matrix: And finally, thank you for reading! In fact, Monte Carlo methods for solving integral equations were developed for studying fissile reactions for the Manhattan Project!
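To show the path-tracing idea on the simplest possible integral equation, here is a toy sketch of our own (not from any rendering system; `estimate_phi` is a hypothetical helper). It estimates the inhomogeneous Fredholm equation of the second kind, phi(x) = f(x) + lam * integral over [0, 1] of K(x, y) phi(y) dy, with random walks terminated by Russian roulette, much like a light path that is absorbed with some probability at each bounce. The estimator is unbiased when the Neumann series converges; with f = 1, K = 1 and lam = 0.5 the exact solution is the constant phi = 1 / (1 - 0.5) = 2, which gives us something to check against.

```python
import random

def estimate_phi(x, f, kernel, lam, n_paths, survive=0.5, seed=0):
    """Random-walk Monte Carlo estimate of phi(x) for
        phi(x) = f(x) + lam * integral_0^1 kernel(x, y) * phi(y) dy.
    Each walk adds the source term f at every point it visits (like emitted
    light at each bounce), continues with probability `survive` (Russian
    roulette), and picks the next point uniformly in [0, 1]."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_paths):
        pos, weight, estimate = x, 1.0, 0.0
        while True:
            estimate += weight * f(pos)
            if rng.random() >= survive:       # walk is absorbed
                break
            y = rng.random()                  # uniform sample; its pdf is 1 on [0, 1]
            # Dividing by the survival probability (and the pdf of 1) keeps the estimate unbiased.
            weight *= lam * kernel(pos, y) / survive
            pos = y
        total += estimate
    return total / n_paths


# f = 1, K = 1, lam = 0.5 has the exact solution phi = 2 everywhere.
print(estimate_phi(0.3, lambda x: 1.0, lambda x, y: 1.0, lam=0.5, n_paths=20_000))
```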

It's quite remarkable that all this "knowledge of the world and one's own behavior" can be captured in a single scalar. We have three very well-known algorithms (currency arbitrage, Q-learning, path tracing) that independently discovered the principle of relaxation used in shortest-path algorithms such as Dijkstra's and Bellman-Ford. Often the profit opportunity is not big enough to justify the risk of crossing that lake. Currency Exchange. Light travels through tree leaves, resulting in umbras that are less "hard" than those cast by a platonic sphere or a rock. Much of the physically-based rendering literature considers the problem of optimal importance sampling to minimize the variance of the path-integral estimators, resulting in less "noisy" images. However, all shortest-path algorithms rely on initializing the cost of every vertex other than the source to positive infinity, so that costs being propagated during relaxation correspond to actually realizable paths. Shortest-path algorithms typically associate edges with costs, and the objective is to minimize the total cost. Infinite Arbitrage
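To see the relaxation/Bellman correspondence on a toy example, the sketch below (our own; `cost_to_go`, `value_iteration` and the graph are hypothetical) computes shortest costs to a goal by repeated relaxation, and separately runs an undiscounted, deterministic Bellman backup in which taking an edge earns a reward equal to minus its cost. Costs start at positive infinity and values at negative infinity, mirror images of each other, so only realizable paths ever update them. It assumes there are no negative-cost cycles.

```python
import math

def cost_to_go(vertices, edges, goal):
    """Shortest-path cost from every vertex to `goal` via repeated relaxation.
    edges: list of (u, v, cost) for directed edges u -> v."""
    dist = {v: math.inf for v in vertices}   # unknown costs start at +infinity
    dist[goal] = 0.0
    for _ in range(len(dist) - 1):
        for u, v, c in edges:
            dist[u] = min(dist[u], c + dist[v])   # relax: go u -> v, then best from v
    return dist

def value_iteration(vertices, edges, goal):
    """Undiscounted deterministic Bellman backup V(s) = max_a [r(s, a) + V(s')],
    where taking edge s -> s' earns reward r = -cost and `goal` has no outgoing edges."""
    value = {v: -math.inf for v in vertices}  # unknown values start at -infinity
    value[goal] = 0.0
    for _ in range(len(value) - 1):
        for s, s_next, c in edges:
            value[s] = max(value[s], -c + value[s_next])
    return value

verts = ["A", "B", "C", "G", "Z"]            # Z has no path to the goal
graph = [("A", "B", 1.0), ("B", "G", 2.0), ("A", "C", 4.0), ("C", "G", 0.5), ("A", "G", 10.0)]
print(cost_to_go(verts, graph, "G"))
print(value_iteration(verts, graph, "G"))
```

The two dictionaries agree up to sign, including the unreachable vertex `Z`, whose cost stays at infinity and whose value stays at minus infinity.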